ISO/IEC 42001: How xLM Operationalizes AI Governance

Discover how xLM operationalizes ISO/IEC 42001 to ensure AI governance, compliance, and trust in life sciences with unmatched precision and accountability.


1.0. Introduction: Why AI Governance is Critical for Life Sciences

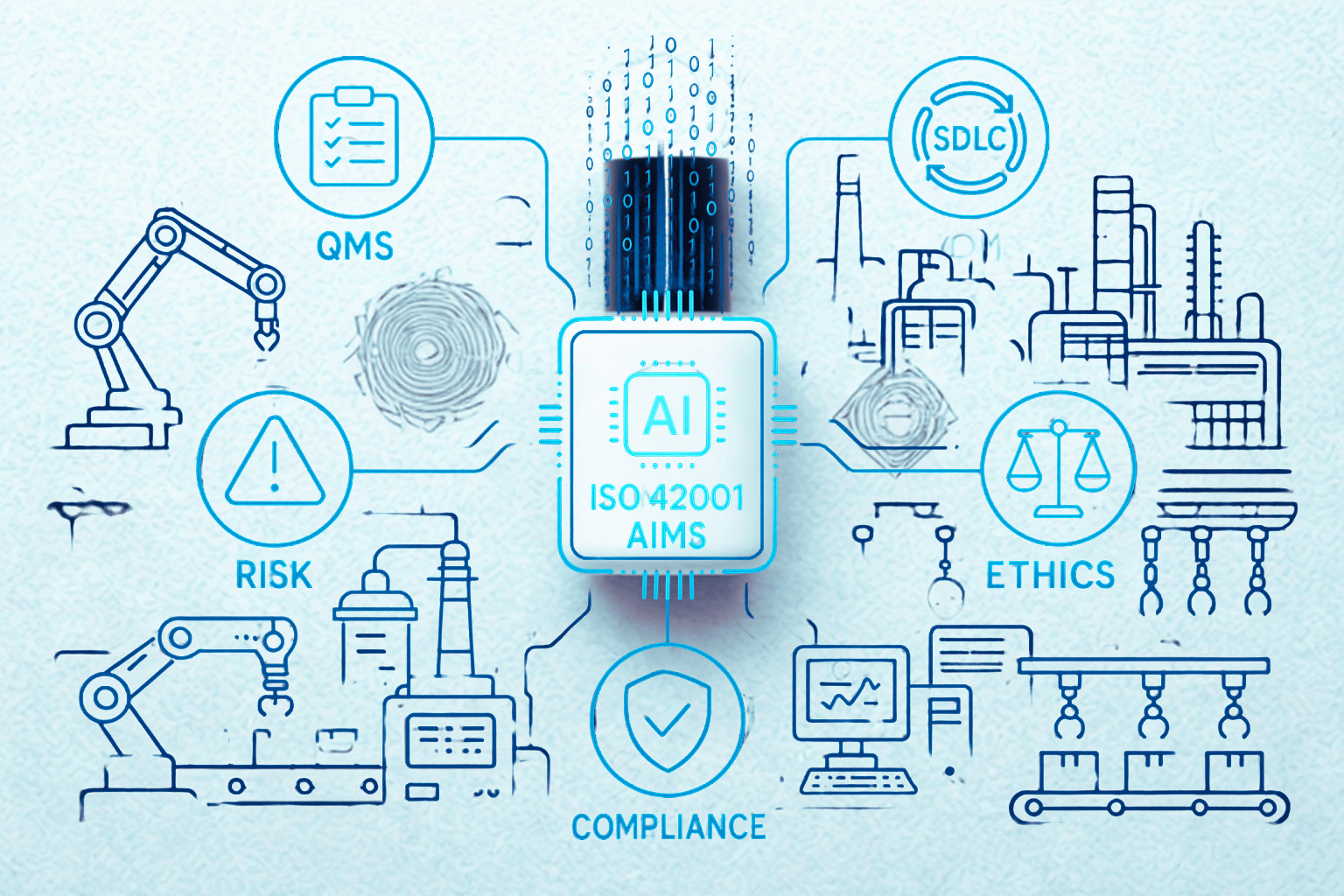

As AI becomes embedded in life sciences operations, effective governance has shifted from optional to essential. At xLM, we lead the way in embedding AI trustworthiness into the core of our operations. By integrating our Software Development Lifecycle (SDLC), Quality Management System (QMS), Change Control, Document Control, and Product Development policies, we ensure compliance with ISO/IEC 42001:2023, the first international standard for AI Management Systems (AIMS).

2.0. What Does ISO/IEC 42001:2023 Require?

ISO/IEC 42001 establishes a comprehensive framework for the responsible management of AI. It addresses:

- Ethical principles and risk management

- Security and human oversight

- Regulatory compliance and continuous monitoring

The standard is organized into 10 clauses, with the management system requirements set out in Clauses 4 through 10, plus a set of Annex A controls. Together, they enable the trustworthy deployment of AI systems in regulated environments.

Let’s delve into how each of these elements is implemented and operationalized at xLM.

2.1. Scope, References, and Definitions (Clauses 1–3)

xLM Alignment:

- xLM has explicitly defined the scope and applicability of its AI Management System (AIMS) across all AI-enabled applications, including machine learning (ML) pipelines, robotic process automation (RPA) engines, and data intelligence systems.

- Our controlled vocabulary and internal glossaries ensure that all stakeholders share a common understanding of AI-related terminology, in accordance with Clause 3.

2.2. Context of the Organization (Clause 4)

xLM Alignment:

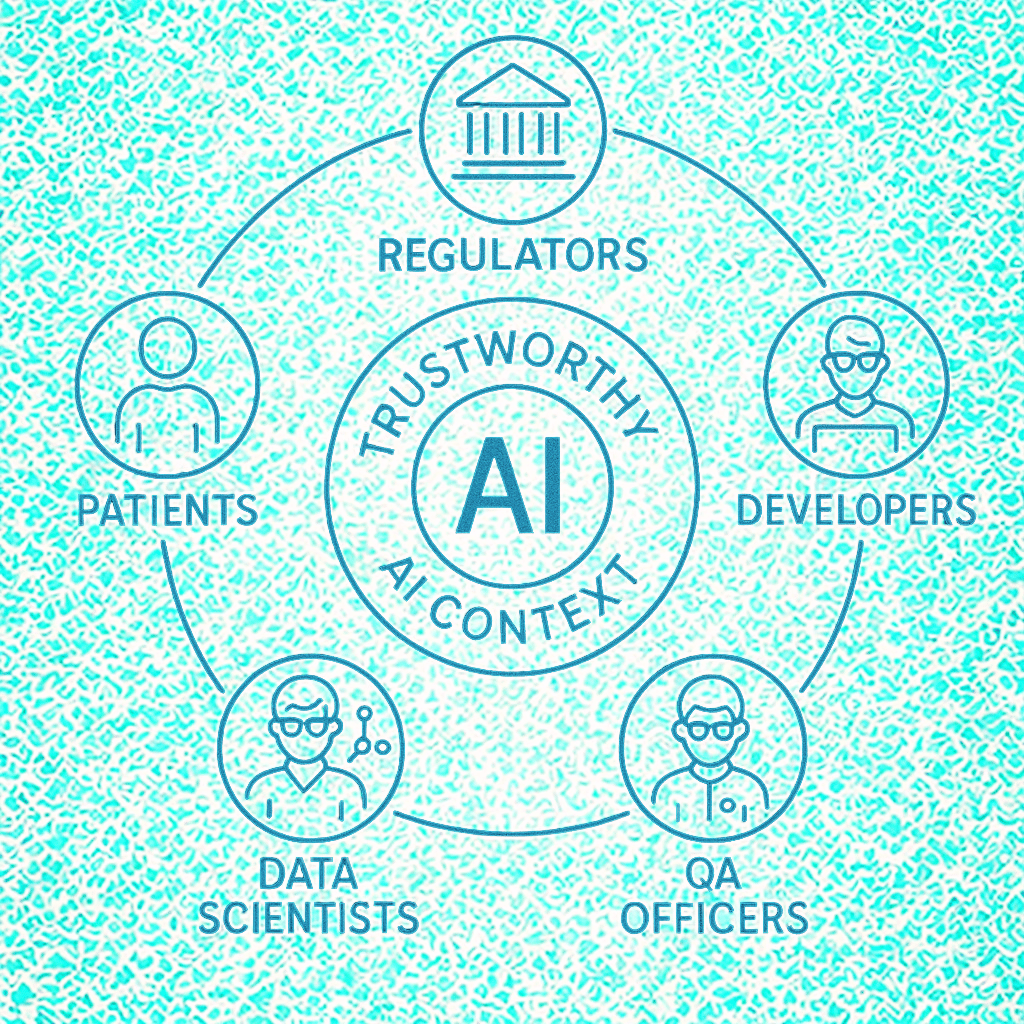

- Stakeholder mapping is integrated into our product development framework, ensuring that our AI systems meet regulatory, customer, and societal expectations.

- Both external drivers (e.g., FDA AI initiatives, EU AI Act) and internal factors (such as data lifecycle maturity and model risk classification) are evaluated during every strategic AI implementation.

2.3. Leadership (Clause 5)

xLM Implementation:

- Our executive steering group supervises all AI-driven initiatives, ensuring that ethical leadership, strategic risk priorities, and accountability are embedded within the decision-making framework.

- Responsibilities for AI risk ownership, ethical compliance, and model transparency are clearly assigned in our governance matrix.
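A governance matrix is easiest to audit when its completeness can be checked mechanically. The sketch below assumes a RACI-style layout (Responsible, Accountable, Consulted, Informed); the role and duty names are hypothetical and are not taken from xLM's actual matrix. It flags any duty that lacks a Responsible role or does not have exactly one Accountable owner.

```python
# Hypothetical RACI-style governance matrix: duty -> {role: RACI code}.
# Role and duty names are illustrative assumptions, not xLM's actual matrix.
GOVERNANCE_MATRIX: dict[str, dict[str, str]] = {
    "AI risk ownership": {"Product Owner": "A", "Data Science Lead": "R", "QA": "C"},
    "Ethical compliance": {"Executive Steering Group": "A", "QA": "R", "Legal": "C"},
    "Model transparency": {"Data Science Lead": "A", "ML Engineer": "R", "QA": "I"},
}

# R = Responsible, A = Accountable, C = Consulted, I = Informed
VALID_CODES = {"R", "A", "C", "I"}


def find_gaps(matrix: dict[str, dict[str, str]]) -> list[str]:
    """List every duty that uses an unknown code, lacks a Responsible role,
    or does not have exactly one Accountable role."""
    problems: list[str] = []
    for duty, assignments in matrix.items():
        unknown = sorted(code for code in assignments.values() if code not in VALID_CODES)
        if unknown:
            problems.append(f"{duty}: unknown RACI codes {unknown}")
        accountable = [role for role, code in assignments.items() if code == "A"]
        if len(accountable) != 1:
            problems.append(f"{duty}: expected 1 Accountable role, found {len(accountable)}")
        if "R" not in assignments.values():
            problems.append(f"{duty}: no Responsible role")
    return problems


if __name__ == "__main__":
    for issue in find_gaps(GOVERNANCE_MATRIX) or ["matrix OK"]:
        print(issue)
```

Requiring a single Accountable role per duty is a common RACI convention; it avoids the situation where ownership of an AI risk is shared so widely that no one is answerable for it.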

2.4. Planning (Clause 6)

xLM Implementation:

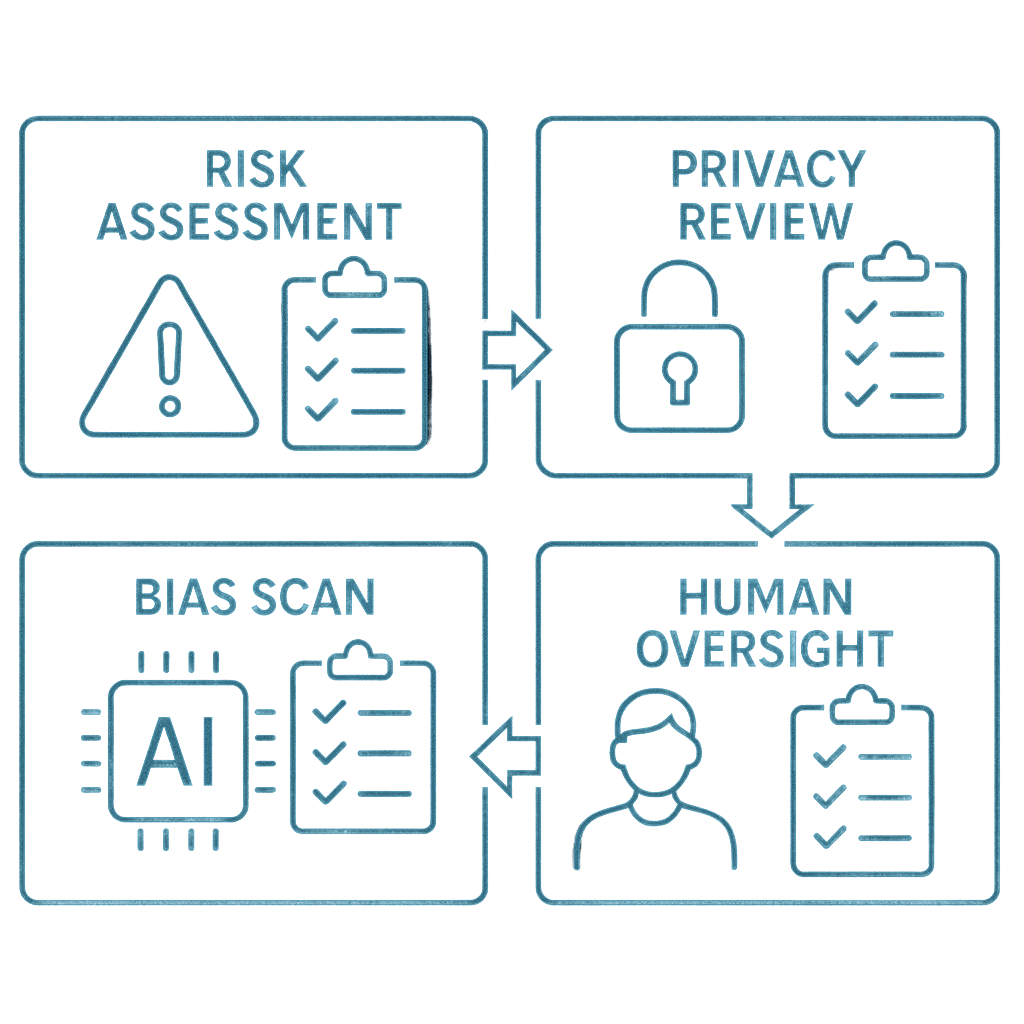

- We conduct risk and impact assessments at every phase of the SDLC, from model selection through to deployment.

- Our comprehensive AI Risk Register facilitates the proactive identification, evaluation, and mitigation of issues related to bias, fairness, privacy, and algorithmic harm.
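A risk register entry typically pairs a hazard category with likelihood and severity ratings. The sketch below is a minimal illustration, assuming a 1-to-5 likelihood × severity scale and hypothetical "high/medium/low" thresholds (a common risk-matrix convention, not values defined by ISO/IEC 42001 or taken from xLM's register). The categories mirror those named above; system names and IDs are made up.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    # Categories named in the AI Risk Register description above.
    BIAS = "bias"
    FAIRNESS = "fairness"
    PRIVACY = "privacy"
    ALGORITHMIC_HARM = "algorithmic harm"


@dataclass
class RiskEntry:
    risk_id: str
    system: str
    category: RiskCategory
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int    # assumed scale: 1 (negligible) to 5 (critical impact)
    mitigation: str = ""

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1..5 scale.
        for name in ("likelihood", "severity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Risk-matrix score: likelihood multiplied by severity (range 1..25).
        return self.likelihood * self.severity

    @property
    def level(self) -> str:
        # Hypothetical thresholds; a real register would define these in an SOP.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def prioritize(register: list[RiskEntry]) -> list[RiskEntry]:
    """Return entries sorted from highest to lowest score."""
    return sorted(register, key=lambda entry: entry.score, reverse=True)


if __name__ == "__main__":
    register = [
        RiskEntry("R-001", "batch-record classifier", RiskCategory.BIAS, 4, 4, "stratified re-sampling"),
        RiskEntry("R-002", "deviation triage model", RiskCategory.PRIVACY, 2, 5, "PII masking"),
        RiskEntry("R-003", "document OCR pipeline", RiskCategory.ALGORITHMIC_HARM, 1, 2),
    ]
    for entry in prioritize(register):
        print(entry.risk_id, entry.category.value, entry.score, entry.level)
```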

2.5. Support (Clause 7)

Ensuring Capability:

- Our QMS training modules have been enhanced to include AI-specific topics such as explainability and dataset drift.

- The infrastructure provisioning SOP ensures that AI systems are supported by adequate computing resources, security controls, and comprehensive audit logging.
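Audit logs in GxP settings are generally expected to be attributable and tamper-evident. One common technique, shown here as an assumption rather than a description of xLM's infrastructure, is a hash-chained, append-only log: each record stores the SHA-256 digest of its predecessor, so editing any earlier record breaks verification. The field names and user IDs are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


class AuditLog:
    """Append-only, hash-chained audit trail (illustrative sketch)."""

    def __init__(self) -> None:
        self.records: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash a canonical JSON encoding of every field except the record's own hash.
        body = {k: v for k, v in record.items() if k != "hash"}
        encoded = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, user: str, action: str, target: str) -> dict:
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "target": target,
            "prev_hash": self.records[-1]["hash"] if self.records else GENESIS,
        }
        record["hash"] = self._digest(record)
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every digest and check each link to the previous record."""
        expected_prev = GENESIS
        for record in self.records:
            if record["prev_hash"] != expected_prev or record["hash"] != self._digest(record):
                return False
            expected_prev = record["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    log.append("jdoe", "retrain_requested", "deviation-triage-model v1.3")
    log.append("qa_reviewer", "retrain_approved", "deviation-triage-model v1.3")
    print(log.verify())                      # True
    log.records[0]["user"] = "someone_else"  # simulate tampering with history
    print(log.verify())                      # False
```

Hash chaining makes tampering detectable, not impossible; in practice it would be combined with access controls and protected storage.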

2.6. Operation (Clause 8)

Operationalizing AI Governance:

- Our SDLC incorporates controls for responsible model development, including version control, explainability documentation, and validation test plans.

- Change control SOPs mandate a review of AI-specific risks prior to any algorithmic updates or retraining events.

- Human-in-the-loop (HITL) reviews are required for all AI deployments classified as critical risk.
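The change control and HITL rules above can be expressed as a release gate that lists everything blocking a change. This is a minimal sketch; the risk tiers, change types, and field names are hypothetical and chosen only to illustrate the two rules (AI risk review before any algorithmic update or retraining, and human sign-off for critical-risk deployments).

```python
from dataclasses import dataclass, field
from enum import IntEnum


class RiskTier(IntEnum):
    # Hypothetical tiers; CRITICAL mirrors the critical-risk class named above.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Hypothetical change types that count as algorithmic updates or retraining events.
AI_CHANGE_TYPES = {"retraining", "algorithm_update"}


@dataclass
class ChangeRequest:
    model_name: str
    change_type: str  # e.g. "retraining", "algorithm_update", "config"
    risk_tier: RiskTier
    ai_risk_review_done: bool = False
    hitl_approvers: list[str] = field(default_factory=list)


def release_blockers(cr: ChangeRequest) -> list[str]:
    """Return the reasons a change cannot be released yet (empty list = may proceed)."""
    blockers: list[str] = []
    # Rule 1: AI-specific risk review before any algorithmic update or retraining.
    if cr.change_type in AI_CHANGE_TYPES and not cr.ai_risk_review_done:
        blockers.append("AI risk review not completed")
    # Rule 2: human-in-the-loop sign-off for critical-risk deployments.
    if cr.risk_tier == RiskTier.CRITICAL and not cr.hitl_approvers:
        blockers.append("HITL approval required for critical-risk deployment")
    return blockers


if __name__ == "__main__":
    cr = ChangeRequest("deviation-triage-model", "retraining", RiskTier.CRITICAL)
    print(release_blockers(cr))
    cr.ai_risk_review_done = True
    cr.hitl_approvers.append("qa_reviewer")
    print(release_blockers(cr))  # []
```

Returning every blocker, rather than stopping at the first, gives reviewers the full picture in a single pass through change control.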

2.7. Performance Evaluation (Clause 9)

Ongoing Monitoring at xLM:

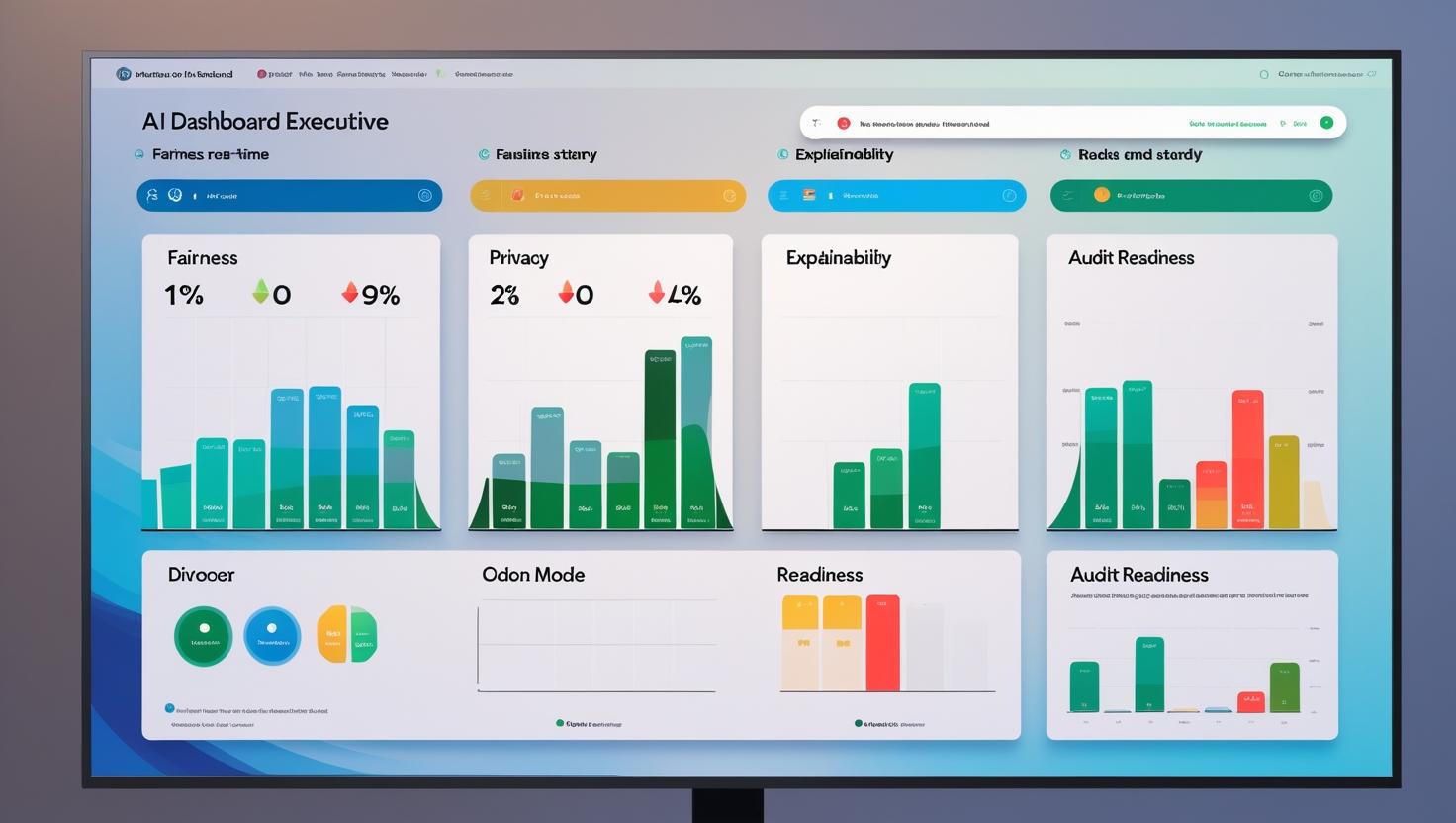

- We track AI KPIs, such as model accuracy drift, bias detection rates, and explainability coverage, through automated dashboards.

- Internal audits and management reviews encompass AI risk dashboards, regulatory audit readiness, and metrics on stakeholder satisfaction.
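To show what a dashboard KPI might compute, the sketch below covers two of the metrics named above: accuracy drift against a validated baseline, and explainability coverage (the share of predictions that carry an explanation). The alert thresholds and input format are hypothetical assumptions; bias metrics are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical alert limits; real limits would be set per model in its monitoring plan.
    max_accuracy_drop: float = 0.05  # absolute drop versus the validated baseline
    min_explainability_coverage: float = 0.95  # share of predictions with an explanation


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of predictions that match the ground-truth labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def kpi_report(
    baseline_accuracy: float,
    y_true: list[int],
    y_pred: list[int],
    explained: list[bool],
    limits: Thresholds = Thresholds(),
) -> dict:
    """Compute accuracy drift and explainability coverage for one monitoring window."""
    current = accuracy(y_true, y_pred)
    drift = baseline_accuracy - current  # positive value means performance has degraded
    coverage = sum(explained) / len(explained) if explained else 0.0
    alerts: list[str] = []
    if drift > limits.max_accuracy_drop:
        alerts.append("accuracy drift")
    if coverage < limits.min_explainability_coverage:
        alerts.append("explainability coverage")
    return {"accuracy": current, "drift": drift, "coverage": coverage, "alerts": alerts}


if __name__ == "__main__":
    report = kpi_report(
        baseline_accuracy=0.92,
        y_true=[1, 0, 1, 1, 0, 1, 0, 0, 1, 1],
        y_pred=[1, 0, 1, 0, 0, 1, 1, 0, 1, 1],
        explained=[True] * 9 + [False],
    )
    print(report["alerts"])  # ['accuracy drift', 'explainability coverage']
```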

2.8. Improvement (Clause 10)

Leveraging Insights for Continuous Improvement:

- Non-conformities identified during validation or post-deployment incidents initiate root cause analysis and Corrective and Preventive Actions (CAPA), which contribute to AI model enhancements.

- Our AI governance council conducts quarterly reviews of metrics to guide platform-wide optimization efforts.
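The path from a non-conformity to a completed CAPA is essentially a small state machine. The sketch below assumes a simple sequence of states with hypothetical names; the effectiveness check state, which can send a record back to root cause analysis, is an assumption borrowed from common CAPA practice rather than something stated above. Transitions that would skip root cause analysis are rejected.

```python
from enum import Enum


class CapaState(Enum):
    OPEN = "non-conformity logged"
    RCA = "root cause analysis"
    ACTION = "corrective/preventive action"
    VERIFY = "effectiveness check"
    CLOSED = "closed"


# Allowed transitions; a failed effectiveness check returns the record to RCA.
TRANSITIONS: dict[CapaState, set[CapaState]] = {
    CapaState.OPEN: {CapaState.RCA},
    CapaState.RCA: {CapaState.ACTION},
    CapaState.ACTION: {CapaState.VERIFY},
    CapaState.VERIFY: {CapaState.CLOSED, CapaState.RCA},
    CapaState.CLOSED: set(),
}


class CapaRecord:
    def __init__(self, capa_id: str, source: str) -> None:
        self.capa_id = capa_id
        self.source = source  # e.g. "validation" or "post-deployment incident"
        self.state = CapaState.OPEN
        self.history: list[CapaState] = [self.state]

    def advance(self, new_state: CapaState) -> None:
        """Move to new_state, refusing any transition not listed in TRANSITIONS."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"{self.capa_id}: cannot go from {self.state.name} to {new_state.name}"
            )
        self.state = new_state
        self.history.append(new_state)


if __name__ == "__main__":
    capa = CapaRecord("CAPA-001", "post-deployment incident")
    for step in (CapaState.RCA, CapaState.ACTION, CapaState.VERIFY, CapaState.CLOSED):
        capa.advance(step)
    print([s.value for s in capa.history])
```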

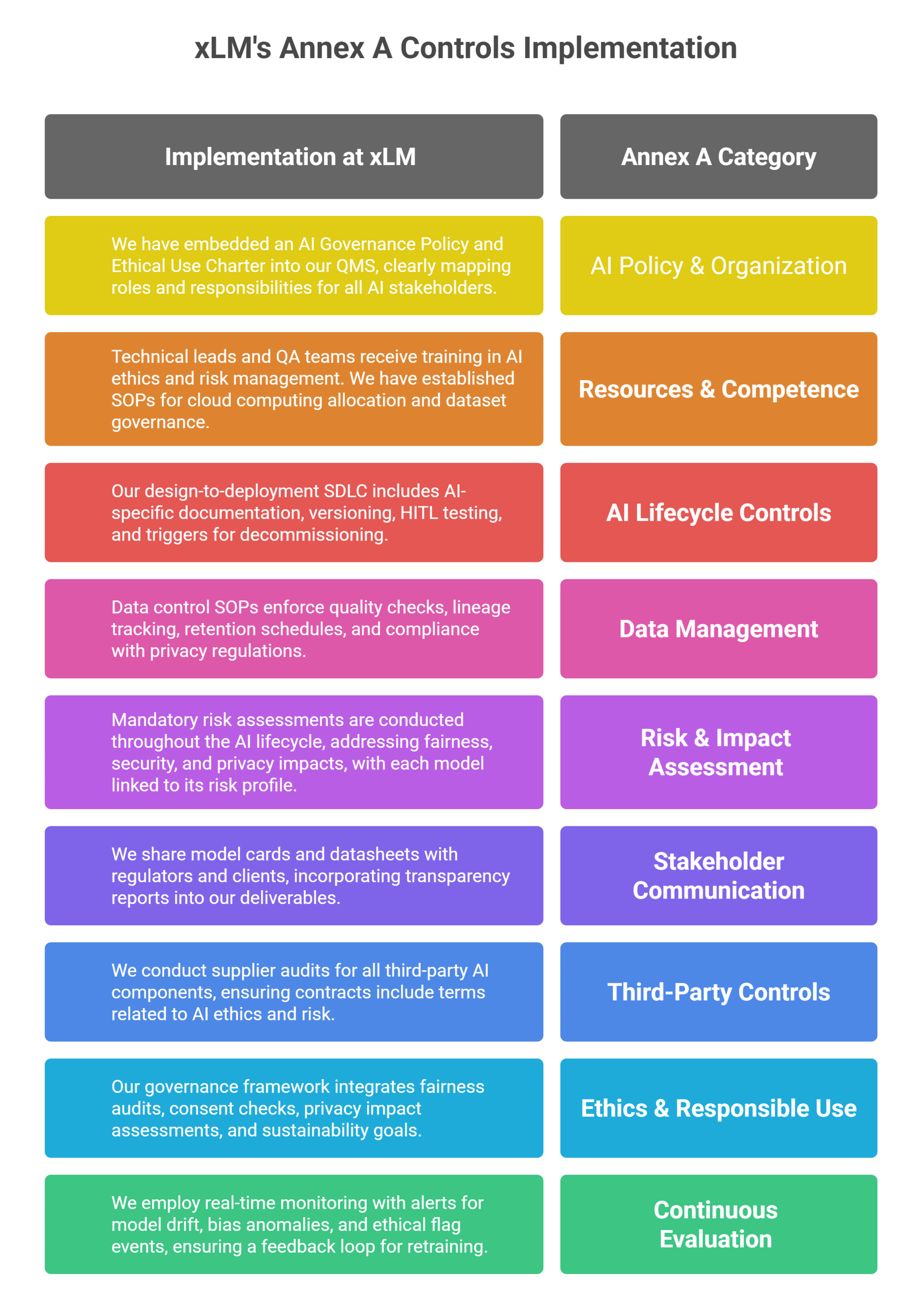

3.0. Annex A Controls: Deep Integration at xLM

Our controls extend beyond mere compliance. Here’s how we have operationalized each category of Annex A controls:

4.0. Why ISO/IEC 42001 Matters for Life Sciences

xLM’s alignment with ISO/IEC 42001 paves the way for a future in which intelligent automation is governed rigorously, so that AI solutions not only meet audit standards but also inspire confidence.

From algorithmic transparency in clinical manufacturing to risk-managed RPA for GxP-regulated operations, xLM is committed to ensuring that trust is built in, not bolted on.

5.0. Key Takeaways

- Comprehensive Lifecycle Governance: AI is managed through established Standard Operating Procedures (SOPs) and review boards, ensuring oversight from inception to decommissioning.

- Explainable and Transparent AI: All stages of development incorporate model cards, impact assessments, and traceability to enhance understanding and accountability.

- Prepared for Audits and Ethical Standards: Our AIMS supports faster validation and faster responses to regulatory inspections.

- Compliance with Future Regulations: xLM is fully equipped to meet the FDA’s AI guidance, the EU AI Act, EU GMP Annex 22, and other international regulations and standards.
